Using the SSIS transformation script component in an ETL

Notice! Warning!

I�m not using this method anymore. Lately I have been using Jamie Thompson�s superior method (see link below). He talks about using a Lookup (or Merge Join) together with a Conditional Split component. The method on this web page is still good for some odd scenarios but, for most common ETL�s I highly recommend Jamie�s method.

Written by: Greg Van Mullem

Date: September 13, 2006

Updated: March 29, 2009

This web page is part of a collection of web pages containing difficult to find technical information about Microsoft SQL Server 2005 Standard Edition. Click here to go to the main index.

The algorithm documentation:

This example shows how to ETL (Extract Translate and Load) customer records from a source (staging) table to a destination table. It uses a standard update or insert algorithm. My code is a very loose adaptation of the unrelated code samples found in these Microsoft web pages. You might be able to get a little extra understanding from looking at them.

Creating a Destination with the Script Component

Developing Specific Types of Script Components

The algorithm extracts each record from the source table and looks it up in the destination table. If the record does not exist in the destination then it is inserted. If the destination record does exist then it will be compared to the source record. If there is a difference then the record will be updated. If the records are exactly the same then an update statement will not be issued. Deletes are not allowed with this data so I�m skipping that part. The algorithm does not rely on date fields to detect changes.

During the ETL I will use counter components to calculate the following values that will be written to a custom logging table.

1. RowsComplete; Count of the rows extracted from the source table.

2. RowsInserted; Count of the rows inserted.

3. RowsUpdated; Count of the rows updated.

4. RowsNotUpdated; Count of the rows where no action was taken. They matched exactly in the source and destination.

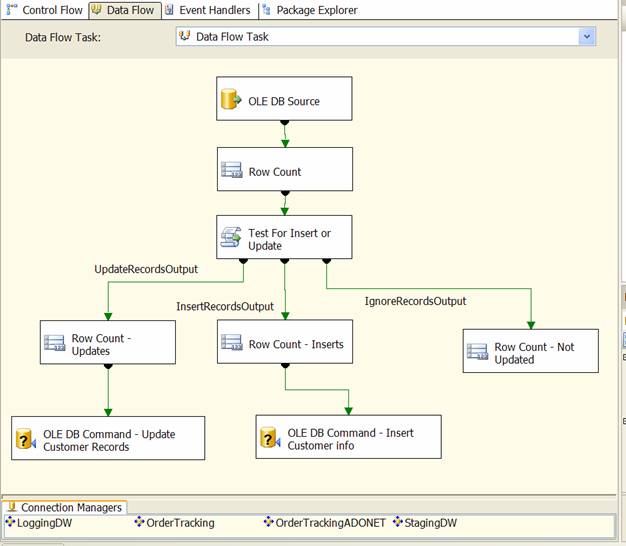

Here is my data flow screen:

The �Test For Insert or Update� task is where the magic happens. It is a �script component� configured with the �Transformation� option.

I have 4 connection managers:

1. LoggingDW is the custom logging database. The counter variables will be written to it in a later control flow task.

2. StagingDW is the source database. The customer records were inserted into it by a previous ETL that got them from our AS400.

3. OrderTracking is the destination database. This connection uses a �Native OLE DB\SQL Native Client� provider.

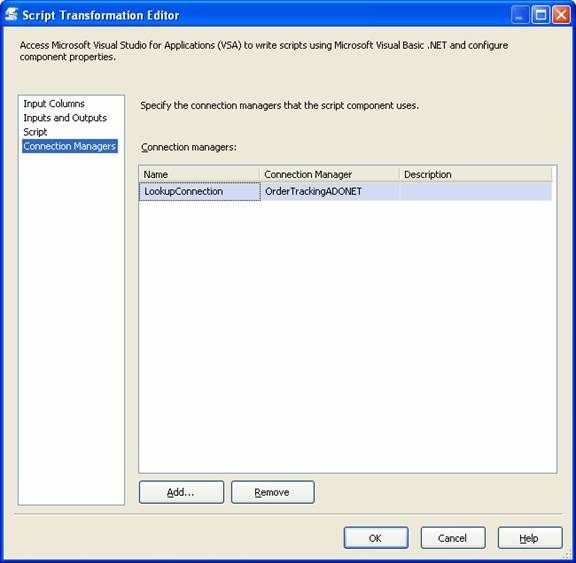

4. OrderTrackingADONET is used by the script component to look up records in the destination table. This connection uses a �.Net Provider\SQLClient Data Provider� provider. Depending or your particular package you may be able to use this as the only destination database connection manager.

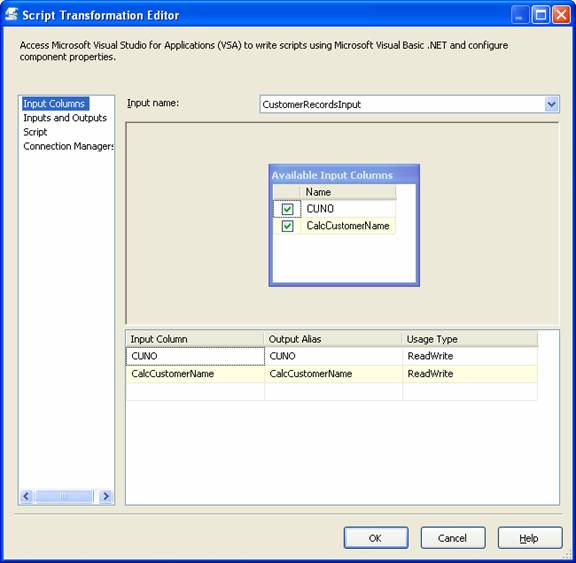

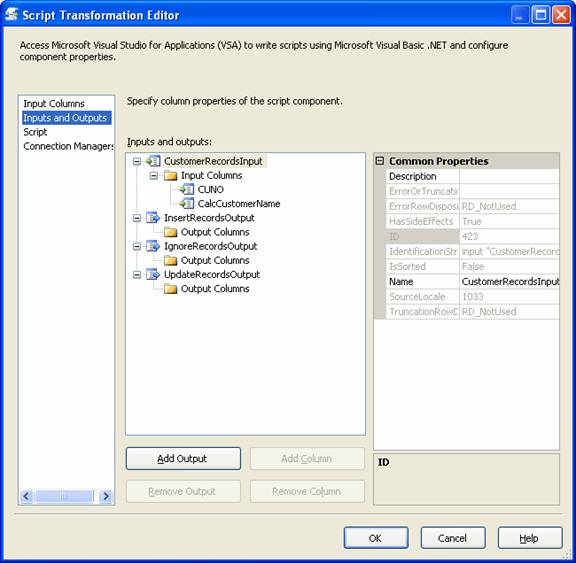

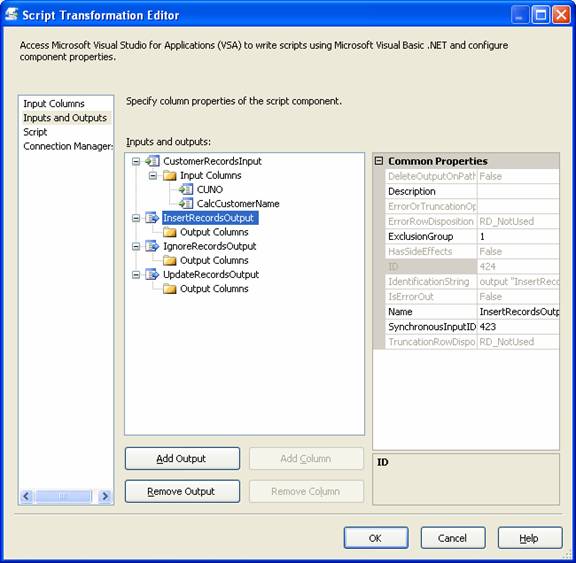

Here are complete screen shots of the Script Transformation Editor:

1. The usage type for all input columns must be ReadWrite. CUNO is an integer primary key and CalcCustomerName is a string (varchar). Note: this data is coming from a mainframe style AS400 database so I�m using some odd field naming conventions (they actually make a little sense in the grand scheme of things).

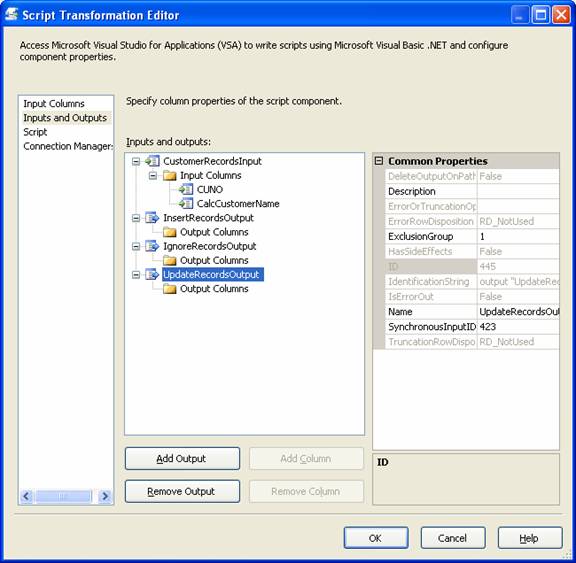

2. I have configured 1 input and 3 outputs. I did not configure any output columns. They will be auto created. Note that the Input ID is 423.

3. All of my outputs are configured exactly the same but with different names. I have set the ExclusionGroup to 1. I have also set the SyncronousInputID to the input ID value from the input configuration (see the previous screen). The SyncronousInputID will be different for every component input you create. Please read the Microsoft help documentation for these 2 properties. They are critical to making the script work.

4. Here is my IgnoreRecordsOutput properties.

5. Here is my UpdateRecordsOutput properties.

6. Here is the Connection Managers screen.

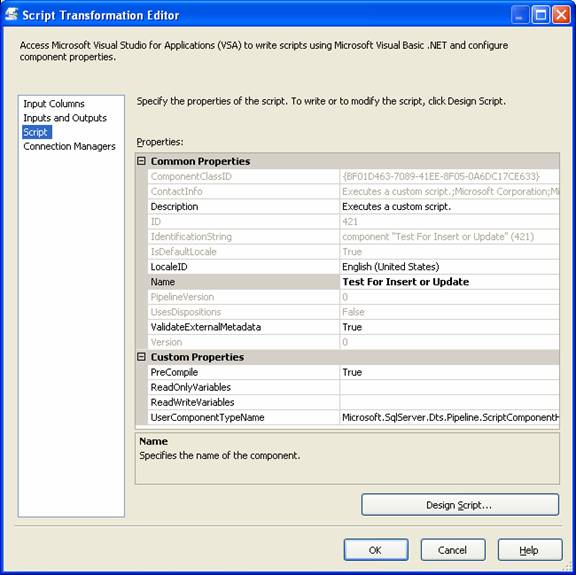

7. Here is the script screen. The Name property is the only thing I changed in here.

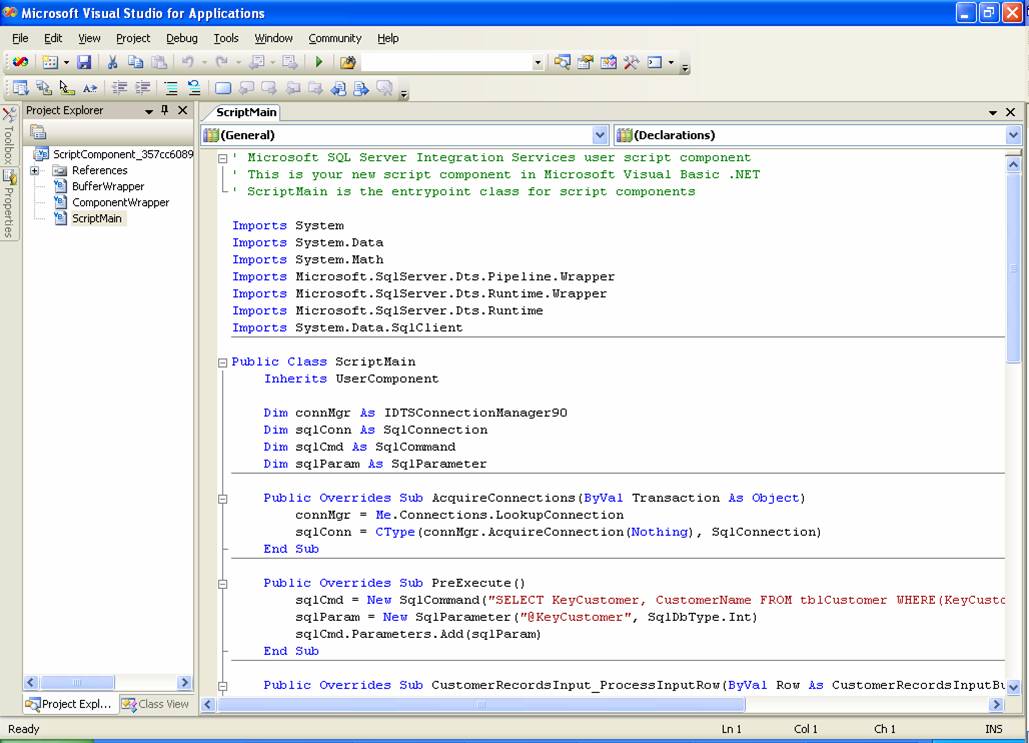

8. After all this configuration is done you can press the �Design Script�� button. Here is the script editor that pops up.

9. Here is my complete source code for the script:

' Microsoft SQL Server Integration Services user script component

' This is your new script component in Microsoft Visual Basic .NET

' ScriptMain is the entrypoint class for script components

Imports System

Imports System.Data

Imports System.Math

Imports Microsoft.SqlServer.Dts.Pipeline.Wrapper

Imports Microsoft.SqlServer.Dts.Runtime.Wrapper

Imports Microsoft.SqlServer.Dts.Runtime

Imports System.Data.SqlClient

Public Class ScriptMain

Inherits UserComponent

Dim connMgr As IDTSConnectionManager90

Dim sqlConn As SqlConnection

Dim sqlCmd As SqlCommand

Dim sqlParam As SqlParameter

Public Overrides Sub AcquireConnections(ByVal Transaction As Object)

connMgr = Me.Connections.LookupConnection

sqlConn = CType(connMgr.AcquireConnection(Nothing), SqlConnection)

End Sub

Public Overrides Sub PreExecute()

sqlCmd = New SqlCommand("SELECT KeyCustomer, CustomerName FROM tblCustomer WHERE(KeyCustomer = @KeyCustomer)", sqlConn)

sqlParam = New SqlParameter("@KeyCustomer", SqlDbType.Int)

sqlCmd.Parameters.Add(sqlParam)

End Sub

Public Overrides Sub CustomerRecordsInput_ProcessInputRow(ByVal Row As CustomerRecordsInputBuffer)

Dim reader As SqlDataReader

sqlCmd.Parameters("@KeyCustomer").Value = Row.CUNO

reader = sqlCmd.ExecuteReader()

If reader.Read() Then

'do all field comparisons here to determine if

' the record changed since the last ETL.

If (reader("CustomerName").ToString() <> Row.CalcCustomerName) Then

Row.DirectRowToUpdateRecordsOutput()

Else

Row.DirectRowToIgnoreRecordsOutput()

End If

Else

Row.DirectRowToInsertRecordsOutput()

End If

reader.Close()

End Sub

Public Overrides Sub ReleaseConnections()

connMgr.ReleaseConnection(sqlConn)

End Sub

End Class

10. The End